Modern data-driven workloads, from AI model training to real-time predictive analytics, place unprecedented demands on computational power. Traditional server systems often struggle to keep up, which leads to bottlenecks, long processing times, and high operating costs. This inefficiency is especially costly for businesses in the financial, manufacturing, and energy sectors, where speed and accuracy directly determine competitiveness. Enter Open Accelerator Module (OAM) GPU servers: a purpose-built solution that Aethlumis brings to market by leveraging its close relationships with industry leaders such as HPE, Dell, and Huawei.

The Limitations of Traditional GPU Server Designs.

Before looking at the advantages of OAM, it helps to understand why standard GPU servers fall short for AI workloads. Traditional architectures, which typically rely on PCIe-based graphics cards, have inherent constraints: limited bandwidth between the GPUs and the CPUs, rigid form factors that make scaling difficult, and poor thermal performance under sustained high-intensity operation.

Key Advantages of OAM GPU Servers for AI Productivity.

OAM GPU servers close these gaps with a design built on three pillars of efficiency:

High-Bandwidth Interconnects for Faster Data Movement.

OAM uses current-generation interconnect technologies (e.g. NVLink and OpenCAPI) to bypass the limitations of the PCIe bus, enabling high-speed point-to-point communication between GPUs and other chips. The result is significantly lower data transfer latency, which is crucial for AI workloads that move large datasets between processing units. For example, a deep learning model trained on image data may train 30-40 percent faster on OAM servers, where GPUs do not hit bottlenecks when accessing training data. By pairing OAM with hardware from HPE, Dell, and Huawei, Aethlumis ensures that these interconnects work together seamlessly, eliminating the compatibility problems that often plague off-the-shelf solutions.
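To make the bandwidth argument concrete, the following is a minimal back-of-the-envelope sketch of how link bandwidth affects the time needed to move a dataset. The function names and the example figures are hypothetical and chosen only for illustration; they are not measurements of any PCIe, NVLink, or OAM product, and the model ignores latency and protocol overhead.

```python
def transfer_seconds(data_gb: float, bandwidth_gb_per_s: float) -> float:
    """Ideal time to move `data_gb` gigabytes over a link.

    Simplifying assumption: sustained throughput equals the stated
    bandwidth, with no latency or protocol overhead.
    """
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return data_gb / bandwidth_gb_per_s


def time_saved_fraction(baseline_bw: float, faster_bw: float) -> float:
    """Fraction of transfer time saved by moving to the faster link."""
    if baseline_bw <= 0 or faster_bw <= 0:
        raise ValueError("bandwidths must be positive")
    return 1.0 - baseline_bw / faster_bw


if __name__ == "__main__":
    # Hypothetical numbers: a 100 GB batch, a 25 GB/s baseline link,
    # and a 100 GB/s accelerator-to-accelerator link.
    print(transfer_seconds(100, 25))     # seconds on the baseline link
    print(transfer_seconds(100, 100))    # seconds on the faster link
    print(time_saved_fraction(25, 100))  # share of transfer time saved
```

Only the data-movement portion of a training job shrinks in this way, so the end-to-end gain depends on how much of the job is bound by transfers rather than by computation.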

On-Demand Scalability to Match Workload Requirements.

AI projects are not static: a company can start by testing a small model and grow to an organisation-wide deployment almost overnight. OAM's modular design lets organizations expand their server infrastructure by adding or upgrading GPUs, memory, and storage without a full system overhaul. This flexibility means companies invest only in the resources they need today and can scale up as their AI workloads grow. It is a game changer for energy sector clients that use grid data to manage the distribution of renewable energy: they can start with a small OAM deployment and scale it as their data collection expands, avoiding overprovisioning and keeping initial costs low.
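The incremental approach described here can be sketched as a simple capacity plan. This is an illustrative model only: `expansion_plan`, the abstract "workload units", and the per-module capacity are hypothetical, and a real sizing exercise would also account for memory, storage, and chassis limits.

```python
import math


def modules_needed(workload_units: float, capacity_per_module: float) -> int:
    """Smallest number of accelerator modules that covers the workload."""
    if capacity_per_module <= 0:
        raise ValueError("capacity_per_module must be positive")
    return math.ceil(workload_units / capacity_per_module)


def expansion_plan(forecast: list[float], capacity_per_module: float) -> list[int]:
    """Modules to add in each period so installed capacity tracks demand.

    Modules are only ever added, never removed, which mirrors buying
    hardware as the workload grows instead of overprovisioning up front.
    """
    installed = 0
    additions = []
    for demand in forecast:
        required = max(installed, modules_needed(demand, capacity_per_module))
        additions.append(required - installed)
        installed = required
    return additions


if __name__ == "__main__":
    # Hypothetical demand per period, with 8 workload units per module.
    print(expansion_plan([10, 25, 25, 60], capacity_per_module=8))
```

In this made-up forecast the final period needs eight modules, but only two have to be bought at the start, which is the cost profile the paragraph above describes.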

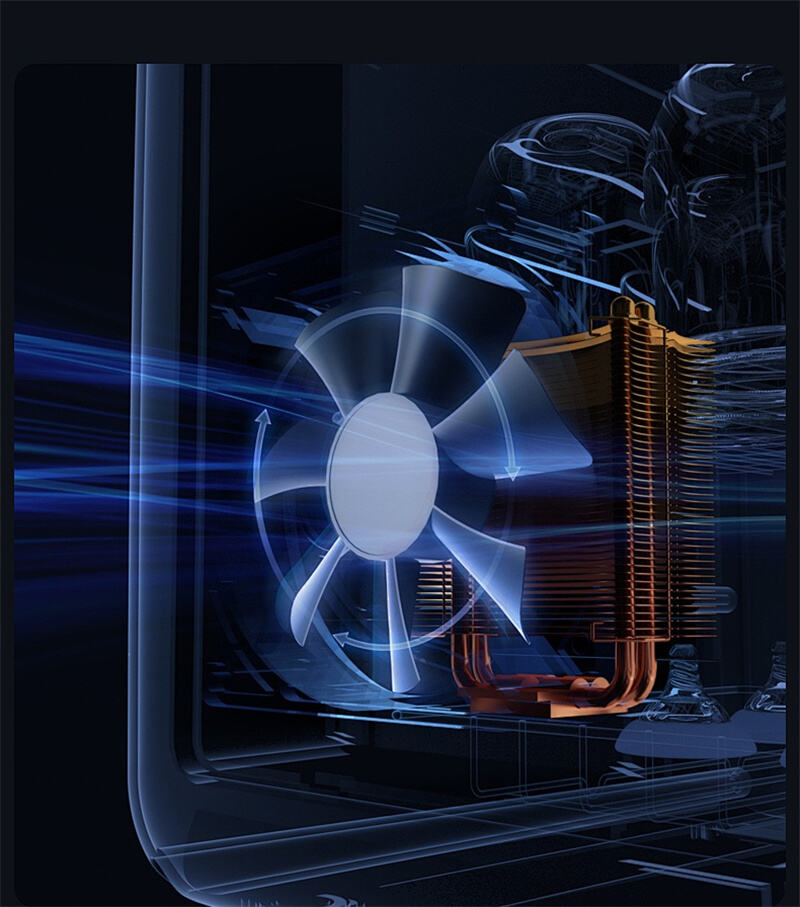

Optimized Power Consumption and Thermal Management.

Intensive AI workloads place a heavy load on servers, generating heat that can degrade performance and drive up energy use. OAM servers are designed for effective thermal management, with enhanced airflow design and direct liquid cooling that keep components at optimal temperatures. This not only improves reliability but also reduces power usage: in line with its commitment to green technology, Aethlumis's OAM solutions use up to 25 percent less power than a traditional graphics-card server to complete the same AI task. For manufacturing plants that run AI quality control systems 24/7, this means lower utility bills and a smaller carbon footprint.
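As a rough illustration of what such a saving could mean for a plant that runs around the clock, the sketch below converts a power reduction into annual energy and cost figures. It reads the 25 percent figure as a reduction in power draw, which is an assumption; the 10 kW baseline and the electricity price are hypothetical placeholders, not vendor data.

```python
HOURS_PER_YEAR = 24 * 365  # continuous 24/7 operation, non-leap year


def annual_energy_kwh(avg_power_kw: float, hours: int = HOURS_PER_YEAR) -> float:
    """Energy used in a year at a constant average power draw."""
    return avg_power_kw * hours


def annual_savings(baseline_kw: float, reduction_fraction: float,
                   price_per_kwh: float) -> float:
    """Yearly cost saved when power draw falls by `reduction_fraction`."""
    if not 0.0 <= reduction_fraction <= 1.0:
        raise ValueError("reduction_fraction must be between 0 and 1")
    saved_kwh = annual_energy_kwh(baseline_kw) * reduction_fraction
    return saved_kwh * price_per_kwh


if __name__ == "__main__":
    # Hypothetical: a 10 kW baseline server, a 25 percent reduction,
    # and electricity at 0.5 currency units per kWh.
    print(annual_energy_kwh(10.0))
    print(annual_savings(10.0, 0.25, 0.5))
```

Because "up to 25 percent" is an upper bound, the result is best treated as a best-case estimate per server.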

Industry-Specific Impact: Tailored OAM Solutions from Aethlumis.

Aethlumis's experience serving the finance, manufacturing, and energy industries ensures that its OAM GPU servers are not only technically advanced but also tailored to each industry:

Finance: OAM's low latency supports real-time processing of market data for algorithmic trading, risk modeling, and similar applications, helping firms make split-second decisions without breaching regulatory requirements. Aethlumis's secure integration ensures that confidential financial data stays protected during AI computations.

Manufacturing: AI applications in manufacturing, such as predictive maintenance and production optimization, depend on processing high volumes of sensor data. OAM servers cut data processing times from hours to minutes, allowing manufacturers to reduce downtime and maximize throughput, backed by timely technical support from Aethlumis to resolve any operational issues.

Energy: Grid optimization and renewable energy forecasting involve massive, highly variable volumes of data. OAM's scalability lets energy companies handle fluctuating data loads, while its power efficiency fits the sector's growing focus on green technology.

Summary: OAM GPU Servers as a Springboard to AI Success.

In a world where business responsiveness depends directly on AI performance, OAM GPU servers are a transformational solution. By addressing bandwidth, scalability, and power concerns, they unlock the potential of AI projects across the organization. Aethlumis's partnerships with HPE, Dell, and Huawei ensure that these high-quality servers come with the reliability, security, and technical support that clients in the finance, manufacturing, and energy sectors need. As AI workloads grow more advanced, OAM GPU servers are not just a hardware upgrade but an investment in the future of computational efficiency.
